Is Airflow Truly Safe? With Reviews of Disclosed Vulnerabilities

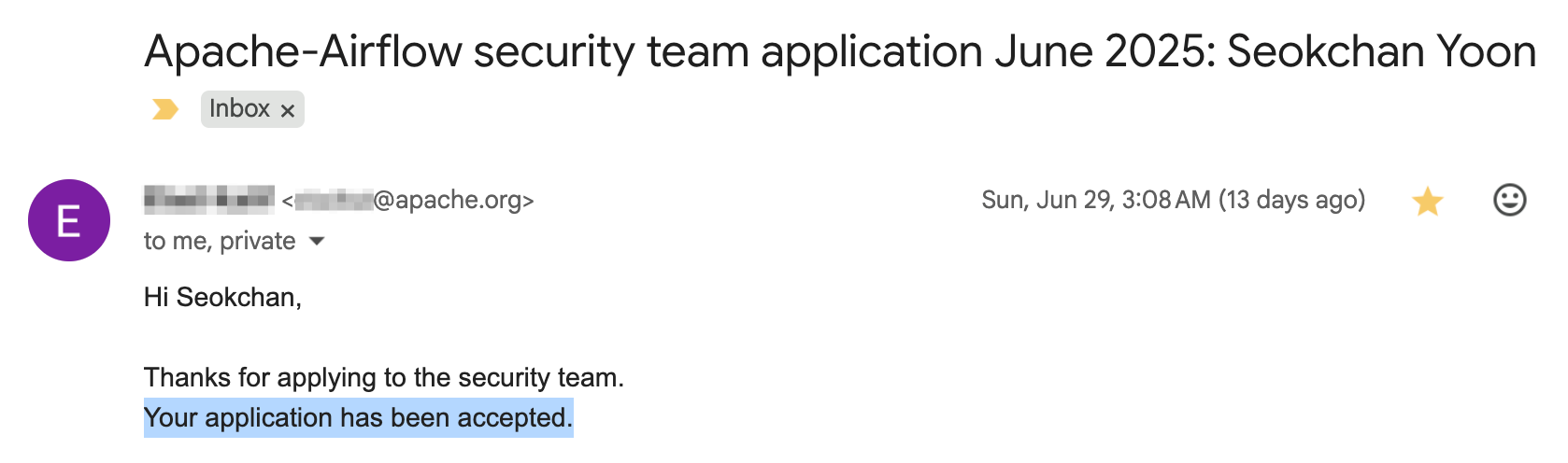

As an Apache Airflow Security Team member, I review three disclosed vulnerabilities to examine Airflow's security posture and what threats to prepare for.

Hello, this is Seokchan Yoon.

This July, I officially joined the Apache Airflow Security Team. For those unfamiliar, Airflow is an open-source workflow management platform managed by the Apache Software Foundation. It's a Python-based tool that has become popular in the data analytics field for building data pipelines.

To mark the occasion of joining the team, I wanted to write a blog post tackling the question: "Is Airflow truly secure?" This article covers much of the same material I presented at the recent Korea Apache Airflow 3rd Meetup. I hope this post helps those who have already adopted or are considering adopting Airflow to review its security aspects and understand what threats to prepare for.

I'll explain these security threats using three vulnerabilities as examples, out of four that I've reported to the Airflow team since last year, all of which have already been patched.

(As a side note, my work with the Airflow Security Team is on a volunteer basis. My day job is as a Security Engineer at zellic.io, a Web3 security audit firm.)

1. How Does Airflow Handle Security Threats?

First, let's look at how the Airflow team processes security vulnerabilities when they are discovered. The Airflow repository has a "Security" tab, which displays the contents of the .github/SECURITY.md file. Let's review it together.

What should (and should not) be reported as a vulnerability?

The team asks that people refrain from sending security reports unrelated to Airflow or generic, automated security emails. In particular, there are cases where the official Airflow Docker image might use a library with a known vulnerability because it hasn't been updated yet. The team appears to handle these versioning issues internally.

Excluding these cases, all other security threats should be sent to security@airflow.apache.org. After a review by the security team, they will be handled as official security vulnerabilities.

How should I report a vulnerability?

The Airflow team requests that each security threat be reported in a single, dedicated email thread. They also mention that it's better to explain the vulnerability in the body of the email rather than using attachments like PDFs or videos.

Is it really a security vulnerability?

In typical web services, a Remote Code Execution (RCE) vulnerability — where a hacker can execute arbitrary code on the server — is often the most critical type of bug. However, since Airflow is fundamentally a program designed to execute workflows and is built to restrict access from untrusted users, simply being able to "execute code" might not be considered a vulnerability.

Therefore, the team strongly recommends reading the Airflow Security Model document before reporting a potential vulnerability.

How is a vulnerability's severity measured?

A vulnerability's severity is assessed according to the Apache Software Foundation Security Team's risk assessment criteria, and the document notes that the resulting rating may differ from what general-purpose scoring standards would assign.

For example, an XSS (Cross-Site Scripting) vulnerability is typically rated as medium severity, but in Airflow's case, it might be rated as low depending on the context.

What happens after I submit a report?

Within a few days of sending the email, you should receive a reply from the security team indicating whether your report has been validated as a security vulnerability. If it is, a CVE number will be issued, and the vulnerability will be publicly disclosed via the announce@apache.org mailing list after a patched version is released.

The document also notes that because the security team is composed of volunteers, responses and patch times may be slow.

Do vulnerabilities in Airflow Providers affect the core package?

Airflow has plugin-like packages called Providers. Vulnerabilities found in a specific Provider are independent of the core package. The document states that if the Provider is not an official one, the responsibility for handling the vulnerability lies with the Provider's maintainer.

2. Airflow's Security Model

To properly understand security threats in Airflow, you first need to understand the security model it was designed with. Airflow has a unique security model, different from typical web services, and all vulnerabilities are evaluated against this model.

The core of the Airflow security model is answering the question: "Who do we trust, and who do we not trust?" The security model document defines four types of user groups.

- Deployment Manager: The top-level administrator responsible for the installation, configuration, and overall infrastructure of Airflow. They have control over the airflow.cfg file, OS-level access, and the environment where Airflow runs (servers, containers, etc.). They bear the final responsibility for Airflow's security, including deciding and controlling who can be a DAG Author. Think of this role as being similar to root in Linux.

- DAG Author: A trusted user who can write, modify, and delete DAG code. Since the code they write is executed on Worker and Triggerer nodes, they effectively have shell access to those nodes. However, their ability to execute code on other nodes (like the webserver and scheduler) is restricted. They can also access credentials for external systems stored in Airflow Connections. You can think of this role as a standard user in Linux.

- Authenticated UI Users: Users who log in to the Airflow web UI and API. Their permissions are strictly limited based on predefined roles like Admin, User, and Viewer. For example, a Viewer can only read DAGs and logs but cannot execute them. Even the highest Admin role is different from a DAG Author; while they can control all UI functions, they should not be able to execute arbitrary code on the system. This is similar to a www-data user in Linux.

- Non-Authenticated UI Users: Unauthenticated users who can only perform limited actions. By default, they can only access the login page and the health check endpoint.

A DAG (Directed Acyclic Graph) is the Python code that defines a workflow, which is the unit of code execution in the Airflow system.

Airflow is designed on the premise that DAG Authors are trusted users. This means the system assumes that anyone who can write and deploy DAG code is trustworthy and will not act maliciously.

Airflow was not built to be a multi-tenant environment where multiple untrusted users can execute code. It was designed for a small number of trusted data engineers or developers to manage workflows as code.

Therefore, permission to add code to the DAGs folder (i.e., DAG Author privileges) is treated as being virtually equivalent to full control over certain Airflow components (Worker and Triggerer nodes). It is an intended feature, not a bug, for a DAG Author to be able to read the file system (e.g., /etc/passwd, /etc/hosts) or call external libraries using Python code.

A situation becomes a security threat only when a DAG Author can exceed their intended permissions and perform actions reserved for a Deployment Manager.
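The trust boundaries described above can be written down as a small lookup table. This is only a sketch of the article's summary, not code from Airflow: the `Role` and `Component` enums, the `CODE_EXECUTION_ALLOWED` table, and `is_privilege_escalation()` are all hypothetical names introduced for illustration.

```python
from enum import Enum


class Role(Enum):
    DEPLOYMENT_MANAGER = "deployment_manager"
    DAG_AUTHOR = "dag_author"
    AUTHENTICATED_UI_USER = "authenticated_ui_user"
    UNAUTHENTICATED_USER = "unauthenticated_user"


class Component(Enum):
    WORKER = "worker"
    TRIGGERER = "triggerer"
    SCHEDULER = "scheduler"
    WEBSERVER = "webserver"


# Where each role is *expected* to be able to run arbitrary code,
# following the role descriptions in this section (illustrative only).
CODE_EXECUTION_ALLOWED = {
    Role.DEPLOYMENT_MANAGER: frozenset(Component),
    Role.DAG_AUTHOR: frozenset({Component.WORKER, Component.TRIGGERER}),
    Role.AUTHENTICATED_UI_USER: frozenset(),
    Role.UNAUTHENTICATED_USER: frozenset(),
}


def is_privilege_escalation(role: Role, component: Component) -> bool:
    """Return True if code execution by `role` on `component` exceeds the model."""
    return component not in CODE_EXECUTION_ALLOWED[role]
```

Under this reading, a DAG Author running code on a Worker is the intended behavior, while the same user running code on the Scheduler or Webserver counts as a vulnerability.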

3. What Have Vulnerability Trends Looked Like in the Last Two Years?

You can view all publicly disclosed vulnerabilities in Apache Airflow through a service like Snyk.

Analyzing the vulnerabilities disclosed over the last two years reveals a few distinct patterns. Most severe vulnerabilities are not exploitable by anonymous users but by authenticated users, particularly those with DAG Author privileges.

1. Remote Code Execution (RCE) via DAG Authors

The most prominent and high-impact type of vulnerability is when a DAG Author leverages their permissions to take over the entire system. As mentioned, since writing and executing DAGs is Airflow's core function, DAG Authors are granted high levels of privilege, which can sometimes lead to vulnerabilities.

- CVE-2024-39877: DAG Author Code Execution possibility in airflow-scheduler

This is a bug I discovered and reported. It was a privilege escalation vulnerability where a DAG Author could insert malicious Jinja2 template code into the doc_md parameter of a DAG object. This allowed them to execute arbitrary code on a node where code execution should be restricted (the scheduler).

```python
# airflow/models/dag.py (in vulnerable version, simplified excerpt)
def get_doc_md(self, doc_md: str | None) -> str | None:
    # ...
    if not doc_md.endswith(".md"):  # render with Jinja2 when doc_md doesn't end with ".md"
        template = jinja2.Template(doc_md)
        return template.render()  # rendered without any sandboxing
    # ...
```

The root cause was the get_doc_md() method, which the DAG object's __init__() calls. As seen in the code, if the doc_md parameter value did not end with .md, the input was passed directly to a Jinja2 template for rendering without validation. This allowed an attacker with write access to the DAGs folder to abuse Jinja2's templating features to execute Python code on the scheduler node.
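Why is rendering attacker-controlled template text so dangerous? A template expression can read attributes of ordinary Python objects, and from almost any object you can walk up to `object` and back down to every loaded class. The sketch below shows that primitive in plain Python, without Jinja2. Both `unsafe_resolve()` and `sandboxed_resolve()` are hypothetical helpers written for this illustration; they are not Airflow's or Jinja2's code.

```python
from typing import Any


def unsafe_resolve(obj: Any, path: str) -> Any:
    """Follow a dotted attribute path with no restrictions at all."""
    for name in path.split("."):
        obj = getattr(obj, name)
    return obj


def sandboxed_resolve(obj: Any, path: str) -> Any:
    """Follow a dotted attribute path, refusing underscore-prefixed names."""
    for name in path.split("."):
        if name.startswith("_"):
            raise PermissionError(f"access to attribute {name!r} is blocked")
        obj = getattr(obj, name)
    return obj


# From an empty string, unrestricted lookup reaches `object` itself ...
root_class = unsafe_resolve("", "__class__.__mro__")[-1]
# ... and from there, every class currently loaded in the interpreter.
loaded_classes = root_class.__subclasses__()
```

Refusing underscore-prefixed attributes cuts off that walk at the first step while still allowing harmless lookups such as `sandboxed_resolve("abc", "upper")`. Jinja2 itself ships `jinja2.sandbox.SandboxedEnvironment`, which applies restrictions in the same spirit when rendering untrusted templates.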

```python
# dags/malicious_dag.py
from airflow.models.dag import DAG

with DAG(
    dag_id='ssti_poc',
    # doc_md containing a malicious Jinja2 template
    doc_md="{{ ''.__class__.__mro__[-1].__subclasses__()[138].__globals__['__builtins__']['__import__']('subprocess').check_output('hostname') }}"
) as dag:
    # ...
```

- CVE-2024-45034: Authenticated DAG authors could execute code on scheduler nodes

This was another critical privilege escalation vulnerability I found. A DAG author could upload a specially named configuration file, airflow_local_settings.py, to the DAGs folder, enabling them to execute arbitrary code on all Airflow components, including the Webserver and Scheduler.

This happened because Airflow adds the DAGs folder to sys.path on startup and then automatically imports that file. When Airflow starts, it calls the initialize() function in airflow/settings.py.

```python
# airflow/settings.py (in vulnerable version)
def prepare_syspath():
    if DAGS_FOLDER not in sys.path:
        sys.path.append(DAGS_FOLDER)  # 1. Add DAGS_FOLDER to sys.path

def import_local_settings():
    try:
        import airflow_local_settings  # 2. Try to import the 'airflow_local_settings' module
    except ImportError:
        pass

def initialize():
    configure_vars()
    prepare_syspath()
    import_local_settings()  # 3. Called during initialization
    # ...

initialize()
```

This function first calls prepare_syspath() to add the DAGs folder (DAGS_FOLDER) to Python's sys.path. Immediately after, import_local_settings() is executed, which imports a module named airflow_local_settings. Since the DAGs folder is in sys.path, if an attacker places an airflow_local_settings.py file there, every Airflow process will import it and execute the malicious code.
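The import behavior described above is easy to reproduce with the standard library alone. The following is a minimal sketch, not Airflow code: `demo_local_settings` is a hypothetical stand-in for the settings module, and a temporary directory plays the role of a folder that DAG authors can write to.

```python
import importlib
import sys
import tempfile
from pathlib import Path
from typing import Optional

MODULE_NAME = "demo_local_settings"  # hypothetical stand-in for the settings module


def import_settings_like_vulnerable_startup(folder: str) -> Optional[str]:
    """Append `folder` to sys.path, then import the settings module by bare name."""
    added = folder not in sys.path
    if added:
        sys.path.append(folder)
    importlib.invalidate_caches()  # make sure freshly written files are seen
    try:
        module = importlib.import_module(MODULE_NAME)
        return getattr(module, "LOADED_FROM", None)
    except ImportError:
        return None
    finally:
        # Undo the side effects so the demo can be run repeatedly.
        if added:
            sys.path.remove(folder)
        sys.modules.pop(MODULE_NAME, None)


def demo() -> Optional[str]:
    """Plant a module in a writable folder and show that startup imports it."""
    with tempfile.TemporaryDirectory() as writable_folder:
        planted = Path(writable_folder, f"{MODULE_NAME}.py")
        planted.write_text('LOADED_FROM = "writable folder"\n')
        return import_settings_like_vulnerable_startup(writable_folder)
```

Whatever sits at module level in the planted file runs at import time, in the importing process and with its privileges. The general lesson is to keep any folder that less-privileged users can write to off the import path used to load configuration.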

These vulnerabilities show that a lack of clear separation between the DAG authoring environment and the Airflow system execution environment can lead to serious problems. A malicious insider or an external attacker who has compromised a DAG Author account could take over the entire workflow system.

2. Provider and Plugin-Based Vulnerabilities

Airflow's functionality can be expanded with extension packages called Providers, and these extensions themselves can contain vulnerabilities. A Provider vulnerability doesn't directly affect the core package, but it can be critical for any environment that uses it.

- CVE-2025-30473: SQL Injection in Common SQL Provider.

This vulnerability allowed malicious SQL statements to be executed through options in certain operators, like the SQLTableCheckOperator.
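To illustrate the general class of bug (not the Provider's actual code), here is a small sketch using the standard library's sqlite3 module. It contrasts a check query built by pasting a caller-supplied SQL fragment into the statement with one that validates identifiers and binds the value as a parameter. The function names, the `where_fragment` parameter, and the `orders` table are all assumptions made for the example.

```python
import re
import sqlite3

IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def count_rows_unsafe(conn: sqlite3.Connection, table: str, where_fragment: str) -> int:
    """Interpolate a caller-supplied fragment straight into the SQL text."""
    query = f"SELECT COUNT(*) FROM {table} WHERE {where_fragment}"
    return conn.execute(query).fetchone()[0]


def count_rows_safe(conn: sqlite3.Connection, table: str, column: str, value: str) -> int:
    """Allow only plain identifiers and pass the value as a bound parameter."""
    for identifier in (table, column):
        if not IDENTIFIER.fullmatch(identifier):
            raise ValueError(f"unexpected identifier: {identifier!r}")
    query = f'SELECT COUNT(*) FROM "{table}" WHERE "{column}" = ?'
    return conn.execute(query, (value,)).fetchone()[0]


def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory table with two 'eu' rows and one 'us' row."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (region TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?)", [("eu",), ("eu",), ("us",)])
    return conn
```

With the unsafe variant, a fragment such as `region = 'eu' OR 1=1` silently changes what the check measures; with the safe variant, the same text is treated as a literal value and simply matches no rows.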

- CVE-2024-39863: XSS via Provider description field.

This was a vulnerability I found, caused by a lack of validation on the Provider's description field. If an administrator installed a maliciously crafted Provider, a script could be executed in the browsers of other users accessing the UI.

The UI page that lists providers used the following regex to parse the description field:

`(.*) [\s+]+<(.*)>`__

Airflow uses this regex to extract a title and URL from strings formatted like `example.com <https://example.com>`__ and turn them into an HTML link such as <a href='https://example.com'>example.com</a>. However, the code implementing this feature did not validate the URL's scheme, so a URL with a malicious scheme, such as javascript:prompt(1), was inserted directly into the <a href="..."> tag, creating an XSS vulnerability.
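The missing check can be sketched with the standard library. This is an illustrative rewrite, not the patch that Airflow shipped: `render_description()`, `ALLOWED_SCHEMES`, and the simplified `LINK_PATTERN` are assumptions made for the example. The idea is to escape everything by default and emit a link only when the URL's scheme is on an allowlist.

```python
import html
import re
from urllib.parse import urlsplit

ALLOWED_SCHEMES = {"http", "https"}
# Simplified variant of the pattern quoted above: `text <url>`__
LINK_PATTERN = re.compile(r"`([^`<]*?)\s+<([^>`]*)>`__")


def _has_allowed_scheme(url: str) -> bool:
    """Return True only for URLs whose scheme is explicitly allowlisted."""
    try:
        return urlsplit(url).scheme.lower() in ALLOWED_SCHEMES
    except ValueError:  # malformed URL: treat as unsafe
        return False


def render_description(description: str) -> str:
    """Escape the description and turn allowlisted `text <url>`__ spans into links."""
    parts = []
    position = 0
    for match in LINK_PATTERN.finditer(description):
        parts.append(html.escape(description[position:match.start()]))
        text, url = match.group(1), match.group(2).strip()
        if _has_allowed_scheme(url):
            parts.append(f'<a href="{html.escape(url, quote=True)}">{html.escape(text)}</a>')
        else:
            parts.append(html.escape(text))  # drop the link, keep the visible text
        position = match.end()
    parts.append(html.escape(description[position:]))
    return "".join(parts)
```

An allowlist is preferable to a blocklist here: anything that is not clearly http or https, including relative or malformed URLs, falls back to plain escaped text.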

3. Sensitive Information Exposure

Beyond remote code execution, another type of vulnerability involves the exposure of sensitive information during workflow execution.

- CVE-2024-45784: Sensitive configuration values are not masked in the logs by default

- This vulnerability could cause critical data or credentials to be written in plain text to Task logs during a workflow run. This could expose key information about the data pipeline to unauthorized users.
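As a rough illustration of what masking in logs means, the sketch below attaches a filter to a standard-library logging handler and replaces known secret values before they are written. It is a generic example and not Airflow's own masking implementation; `RedactSecrets`, `demo()`, and the sample token are hypothetical.

```python
import io
import logging
import re
from typing import Iterable


class RedactSecrets(logging.Filter):
    """Replace known secret values in log messages with '***'."""

    def __init__(self, secrets: Iterable[str]) -> None:
        super().__init__()
        # Longest first, so a secret that contains another is masked whole.
        values = sorted((s for s in secrets if s), key=len, reverse=True)
        self._pattern = re.compile("|".join(map(re.escape, values))) if values else None

    def filter(self, record: logging.LogRecord) -> bool:
        if self._pattern is not None:
            # Format first, so secrets passed as %-style arguments are covered too.
            record.msg = self._pattern.sub("***", record.getMessage())
            record.args = ()
        return True  # never drop the record, only rewrite it


def demo() -> str:
    """Log a message containing a secret and return what the handler wrote."""
    stream = io.StringIO()
    handler = logging.StreamHandler(stream)
    handler.addFilter(RedactSecrets(["s3cr3t-token"]))
    logger = logging.getLogger("masking_demo")
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.addHandler(handler)
    try:
        logger.info("connecting with password=%s", "s3cr3t-token")
    finally:
        logger.removeHandler(handler)
    return stream.getvalue()
```

A filter like this can only mask values it has been told about, which is why a default that leaves sensitive configuration values unmasked matters: anything missing from the list reaches the log in plain text.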

Conclusion: So, Is Airflow Secure?

Based on the vulnerabilities analyzed in this post, we can draw a few conclusions.

1. The biggest threat comes from the malicious actions of a "trusted" DAG Author.

The likelihood of an unauthenticated external user compromising the system is relatively low. However, a malicious insider with DAG authoring privileges—or an attacker who has stolen their credentials—can pose a serious threat to the entire system.

2. Separating the DAG authoring environment from the Airflow execution environment is critical.

The official docker-compose.yaml file is configured to run all nodes on a single instance for development convenience. However, this is not a security best practice, a fact the Airflow team acknowledges. A setup based on the official docker-compose.yaml file creates the possibility for a DAG author to exceed their intended permissions. An architecture that clearly separates the execution scope of each component is necessary for a secure production environment.

That said, if you can FULLY TRUST your DAG authors, setting up an Airflow environment with the official docker-compose.yaml file may be an acceptable trade-off between security and convenience.

3. A continuous update strategy is essential.

Because Airflow is an actively developed open-source project, new vulnerabilities will continue to be discovered. Having a stable update process tailored to your team's environment is the most reliable way to maintain security.

In conclusion, while Airflow itself has a robust security model, its actual security level depends heavily on how the system is configured and managed in accordance with that model. The core of Airflow security is clearly controlling who can be a DAG Author and what environment they write and execute code in.